Analysing Honeypot Data with Sentinel

Carrying on my series of posts around Azure Sentinel, I wanted to see if I could use it to process logs from a honeypot and produce useful information. Eventually this could be extended to provide additional context to alerts and even train ML models within Sentinel.

Having played with honeypots a little in the past, I wanted to use something quick and easy to set up; since I wanted to analyze high-level data at scale rather than digging into specific attacks, there was no need for a high-interaction honeypot. HoneyDB seemed to meet these requirements so I went ahead and installed it on a new EC2 instance running Amazon Linux.

I won’t go into the details of installing the honeypot agent since there’s adequate documentation for that. Once installed, it requires little to no configuration to get started: I added my HoneyDB API keys, enabled local logging, disabled some services that I wasn’t interested in, added an allow any/any rule to the security group and watched the logs start to roll in.

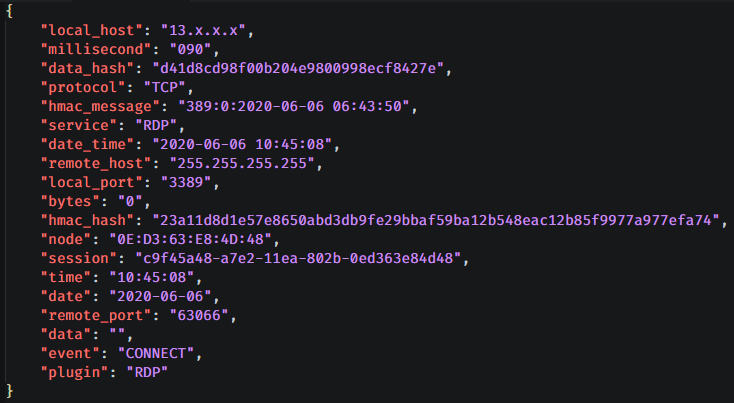

/var/log/honey/rdp.log (IPs Obfuscated)

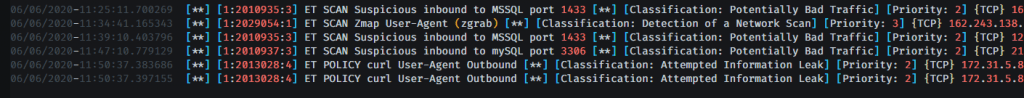

To catch specific exploits and attacks I wanted something that would analyze the network traffic in greater detail, so I installed Suricata and added the Emerging Threats Open rules.

/var/log/suricata/fast.log – we’ll be ingesting eve.json which contains more details but fast.log is more human readable

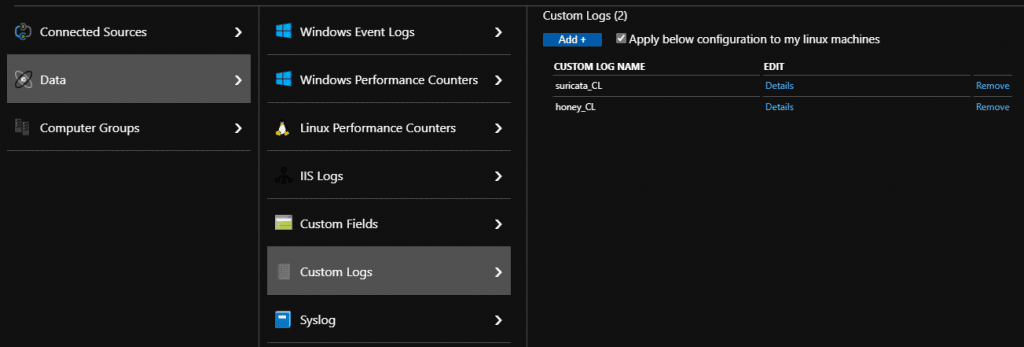

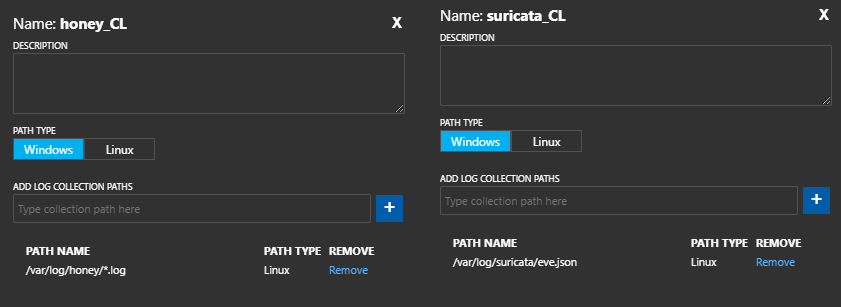
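To give a feel for what eve.json offers over fast.log, here is a minimal Python sketch that reads eve.json-style lines (one JSON object per line) and counts alerts per source address and signature. The `event_type`, `src_ip` and `alert.signature` fields are standard in Suricata's EVE output; the sample lines, addresses and signature names below are invented for illustration and are not taken from my logs.

```python
import json
from collections import Counter

# Invented sample lines in the shape of eve.json (documentation-range IPs).
SAMPLE_EVE = """\
{"timestamp": "2020-01-01T00:00:00.000000+0000", "event_type": "alert", "src_ip": "192.0.2.10", "dest_port": 3389, "alert": {"signature": "EXAMPLE RDP scan"}}
{"timestamp": "2020-01-01T00:00:01.000000+0000", "event_type": "flow", "src_ip": "192.0.2.10", "dest_port": 3389}
{"timestamp": "2020-01-01T00:00:02.000000+0000", "event_type": "alert", "src_ip": "192.0.2.10", "dest_port": 3389, "alert": {"signature": "EXAMPLE RDP scan"}}
{"timestamp": "2020-01-01T00:00:03.000000+0000", "event_type": "alert", "src_ip": "198.51.100.7", "dest_port": 22, "alert": {"signature": "EXAMPLE SSH brute force"}}
"""


def count_alert_signatures(lines):
    """Count (src_ip, signature) pairs across alert events only."""
    counts = Counter()
    for line in lines:
        line = line.strip()
        if not line:
            continue
        try:
            event = json.loads(line)
        except json.JSONDecodeError:
            continue  # skip truncated or partially written lines
        if event.get("event_type") != "alert":
            continue  # eve.json also carries flow, dns, http and other events
        signature = event.get("alert", {}).get("signature", "unknown")
        counts[(event.get("src_ip"), signature)] += 1
    return counts


alert_counts = count_alert_signatures(SAMPLE_EVE.splitlines())
for (src_ip, signature), hits in alert_counts.most_common():
    print(src_ip, signature, hits)
```

Against a real sensor the same function could be fed `open("/var/log/suricata/eve.json")` instead of the sample string.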

Now that I had JSON logs from the honeypot agent and from Suricata, I installed the Microsoft OMS agent and created a couple of custom file definitions in the Log Analytics dashboard. Since Log Analytics doesn’t parse files on ingest, it’s possible to use a wildcard for the honeypot logs even though each service’s log contains different fields. We can parse these differently when we come to query them.

Log Analytics contains a handy parse_json() function which takes the RawData field and turns it into a dynamic field. The evaluate bag_unpack() plugin can then turn this into separate columns, so we don’t need to write any of the parsing logic manually.

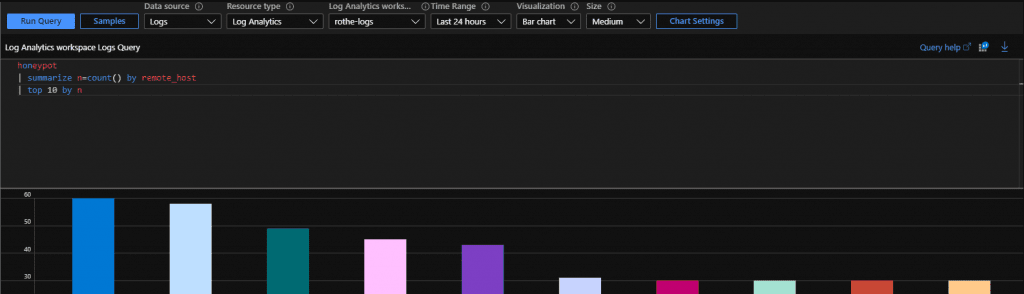

To start with I wrote a couple of simple queries to parse the JSON logs and saved them as functions so that I could reuse them later.

    honey_CL
    | extend logdata=parse_json(RawData)
    | evaluate bag_unpack(logdata)
    | project-away RawData
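For anyone more comfortable with Python than KQL, the effect of that query on a single row can be sketched roughly as follows. This is only an illustration of the idea, not how Log Analytics implements it: the field names inside the sample JSON (`service`, `remote_host`, `remote_port`) are hypothetical, and unlike bag_unpack() this sketch simply lets unpacked keys overwrite existing columns with the same name.

```python
import json


def unpack_raw_data(record, column="RawData"):
    """Mimic parse_json() + bag_unpack() + project-away for one row.

    The JSON object stored as text in `column` is parsed and its top-level
    keys become columns of the row; the raw text column itself is dropped.
    """
    row = {key: value for key, value in record.items() if key != column}
    try:
        bag = json.loads(record.get(column, ""))
    except (json.JSONDecodeError, TypeError):
        bag = None  # not JSON (or missing): keep the row without extra columns
    if isinstance(bag, dict):
        row.update(bag)
    return row


# Hypothetical rows: each service can log a different set of fields.
rows = [
    {"TimeGenerated": "t1", "RawData": '{"service": "RDP", "remote_host": "192.0.2.10"}'},
    {"TimeGenerated": "t2", "RawData": '{"service": "SSH", "remote_port": 50022}'},
    {"TimeGenerated": "t3", "RawData": "not json"},
]
unpacked = [unpack_raw_data(row) for row in rows]
for row in unpacked:
    print(row)
```

Because each row is unpacked independently, rows from different services end up with different columns, which is why a single wildcard log definition is workable.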

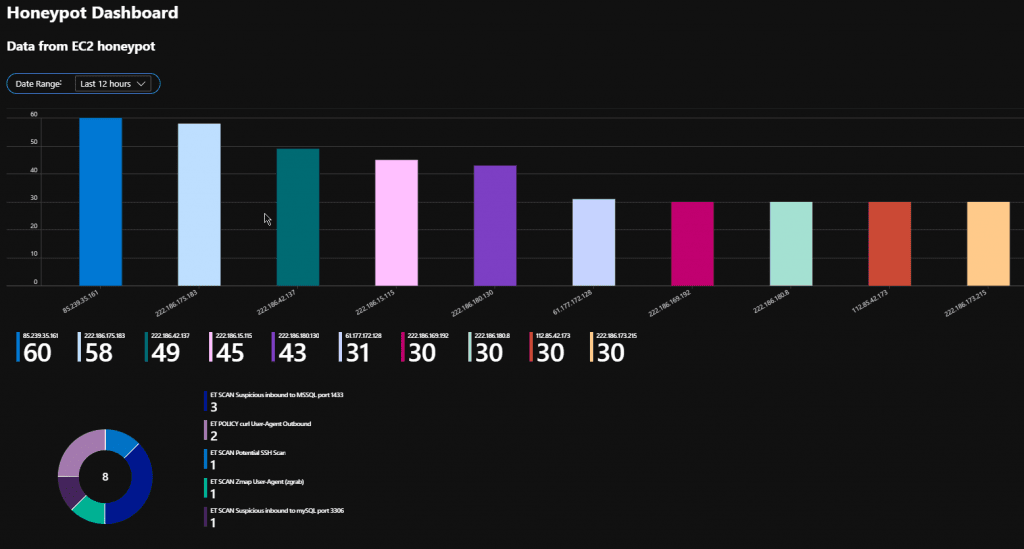

Then I used these functions in an Azure Workbook to produce a nice dashboard with a parameter for adjusting the time-scale that’s being inspected.

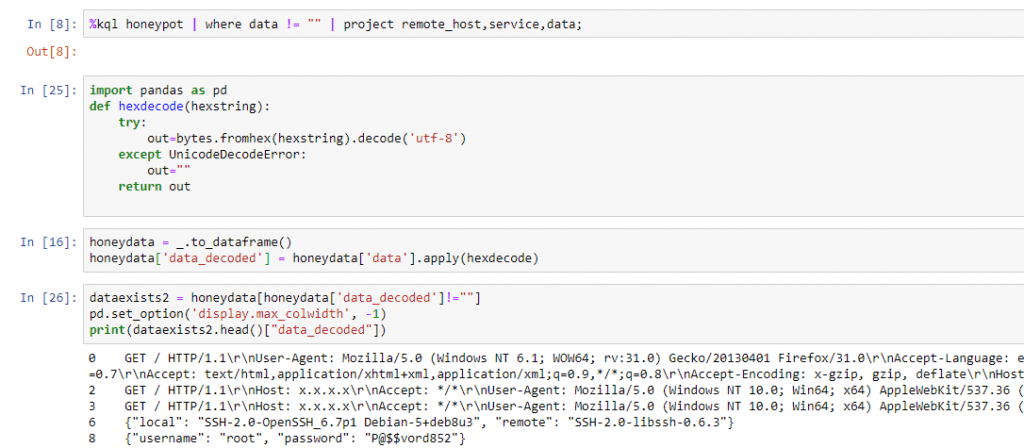

For some services, the honeypot records more data, such as login attempts, and this is recorded as a hexadecimal string. Frustratingly, KQL doesn’t provide a function to convert hex to ASCII at the moment. One option would be to do some pre-processing on the server before sending the logs, but in this case I used Azure’s Jupyter notebooks to do the analysis.

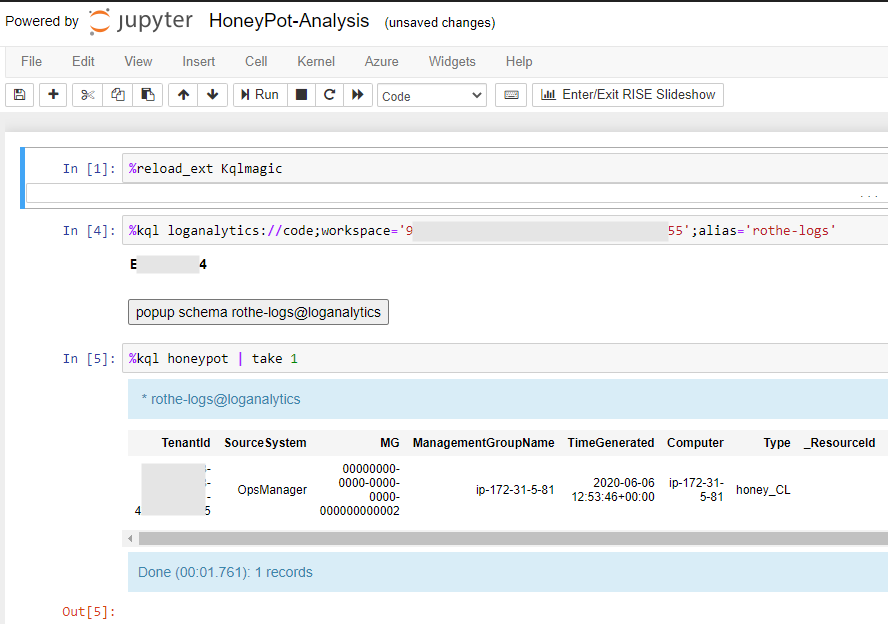

The first cell in the notebook loads the KQL extension that allows us to query the logs directly. It then connects to the Log Analytics Workspace using an interactive login prompt and tests the connection by grabbing a single record from our honeypot function.

I then wrote a quick function that attempts to convert the hex to ASCII and applied it to the dataframe containing the results, which added a new column with the decoded data. The output then shows the records where decoding succeeded and the HTTP or SSH data was returned. As more data is collected, this will allow for analysis of common credentials and potentially for linking addresses to specific botnets or malware.
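The decoding step is small enough to sketch here. This is a minimal, dependency-free version rather than the exact notebook code: the column name `data` is an assumption about where the hex string lives, and plain dictionaries stand in for the dataframe rows.

```python
def hex_to_ascii(value):
    """Return the decoded text, or None if value is not valid hex or not ASCII."""
    if not isinstance(value, str) or not value:
        return None
    try:
        return bytes.fromhex(value).decode("ascii")
    except ValueError:
        # Covers both malformed hex and bytes outside the ASCII range
        # (UnicodeDecodeError is a subclass of ValueError).
        return None


# Hypothetical records; "data" is an assumed field name.
records = [
    {"service": "HTTP", "data": "474554202f20485454502f312e310d0a"},
    {"service": "RDP", "data": "fffe"},      # valid hex, but not ASCII
    {"service": "SSH", "data": "zz"},        # not hex at all
]
for record in records:
    record["decoded"] = hex_to_ascii(record["data"])

# Keep only the rows where decoding succeeded.
decoded_rows = [r for r in records if r["decoded"] is not None]
print(decoded_rows)
```

With a pandas dataframe the equivalent would be along the lines of `df["decoded"] = df["data"].apply(hex_to_ascii)`, followed by dropping the rows where the new column is empty.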

Intelligence gathered from this data could also be used to take action. Addresses that have been seen hitting a honeypot repeatedly could be blocked on a firewall using an Azure Logic App, or an application could request additional verification from them, such as a CAPTCHA.
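Picking out the repeat offenders is the easy part and could look something like the sketch below. The `remote_host` field name and the threshold of five hits are assumptions chosen for illustration; the resulting list is what would be handed to whatever does the blocking, such as a Logic App.

```python
from collections import Counter


def repeat_offenders(events, min_hits=5, field="remote_host"):
    """Return the sorted source addresses seen at least min_hits times."""
    hits = Counter(event[field] for event in events if event.get(field))
    return sorted(address for address, count in hits.items() if count >= min_hits)


# Hypothetical events using documentation-range addresses.
events = (
    [{"remote_host": "192.0.2.10", "service": "RDP"}] * 6
    + [{"remote_host": "198.51.100.7", "service": "SSH"}] * 2
    + [{"service": "HTTP"}]  # event with no source address recorded
)
to_block = repeat_offenders(events)
print(to_block)
```

In practice the threshold would want tuning against the time window being inspected, since a single scanner can generate many events in a few seconds.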


Martin Rothe

Martin is a Security Engineer working in the N4Stack Security Operations Center (SOC) – His job involves designing and deploying security solutions for customers, responding to security incidents, and investigating new technologies and techniques to protect users, networks and data from the bad guys.
